Chrome AI: Built-in Captions on the fly

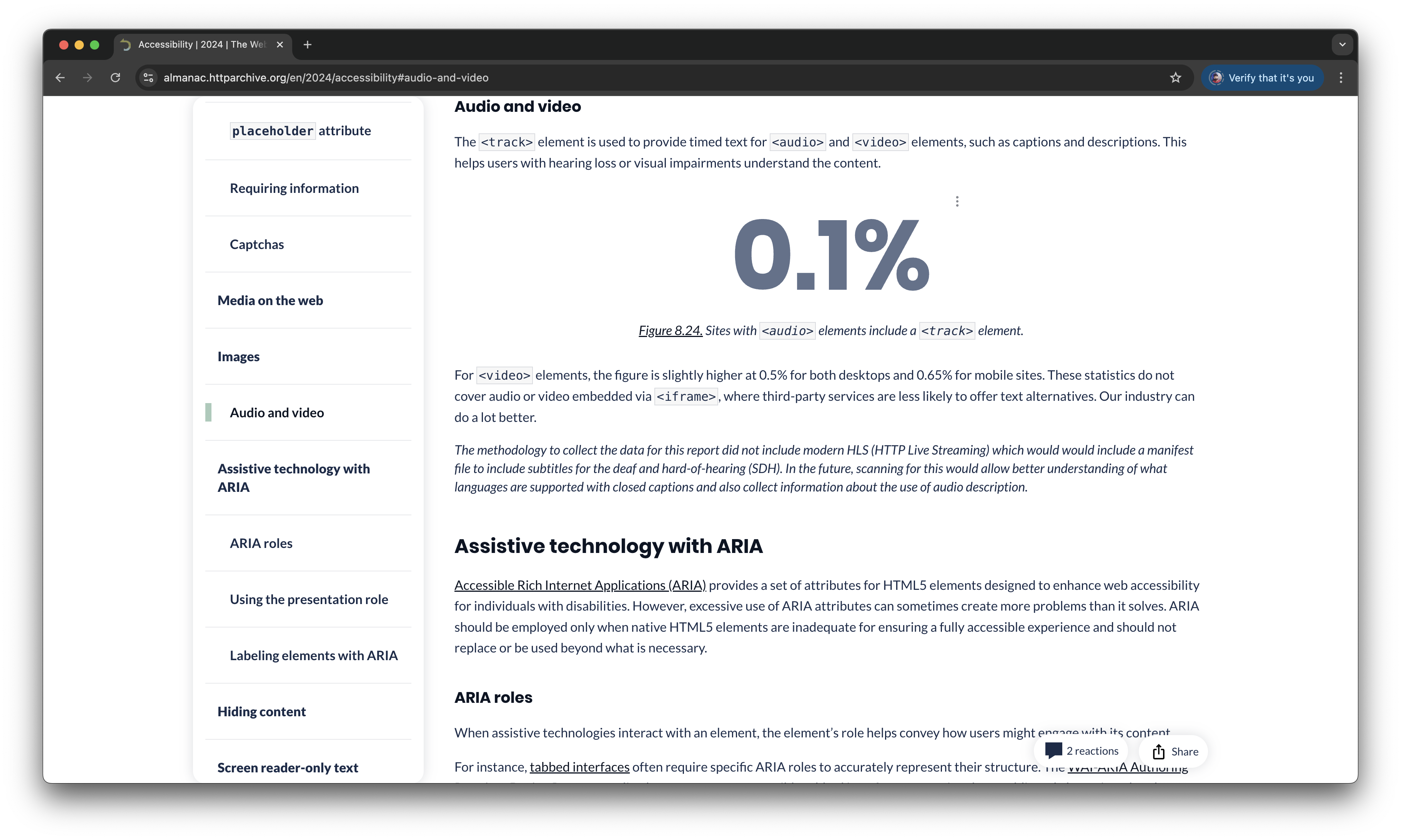

Only 0.5% of web videos include a <track> element (source: The Web Almanac by HTTP Archive, 2024 report), leaving the vast majority of online video inaccessible to people who rely on captions. This project proposes a built-in UI option on video elements that lets the browser generate captions on the fly when a <track> element is missing. The goal is to improve web accessibility without requiring authors to change their markup.

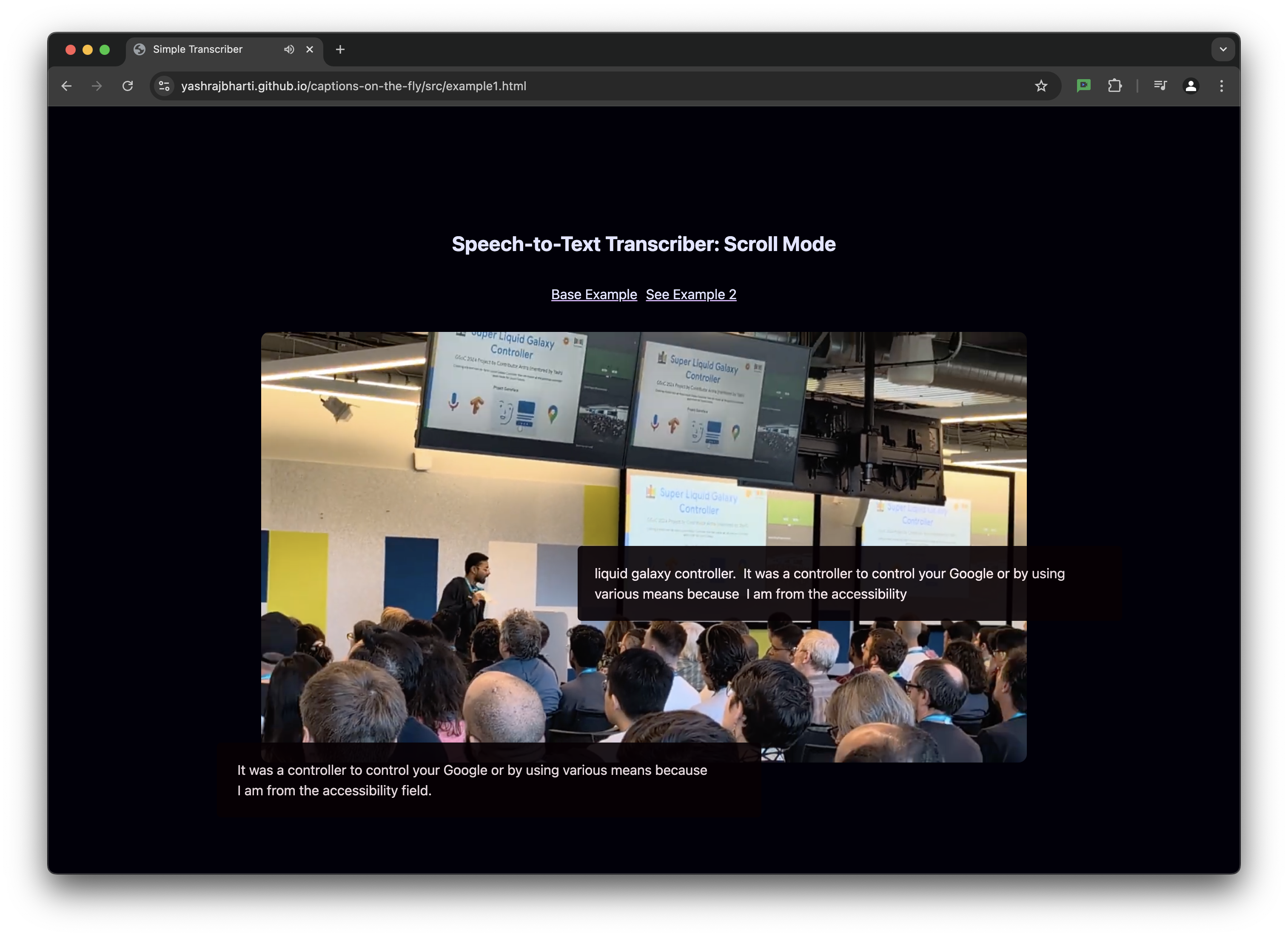

I started by exploring, with a focus on UX and performance, how to make web captions available on the client side, directly through JavaScript. The Web Almanac report later gave me a concrete reason to keep going. I used client-side AI with Hugging Face and Transformers.js, wrote the code and the explainer, and acted as an independent contributor helping various vendors with auto-captioning on the web. Chrome's built-in Gemini Nano work targets a similar area, bringing multimodal capabilities to Chrome through a powerful client-side model. That is how I connected with Kenji, Dirk, Adam, and Thomas from Google, who supported me and helped with my explainer.

Only 0.5% of all web videos have captions, and many companies skip captioning their videos because of timeline and budget constraints. Auto-captions aim to solve this problem for document authors and to help end users, especially people who are deaf or hard of hearing and rely on captions.

I worked on auto-captions that transcribe videos with client-side AI using Hugging Face Whisper models, and wrote explainers and a Chrome extension to strengthen the work.
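To make the transcription step more concrete, here is a minimal sketch of turning timestamped transcript chunks into a WebVTT caption file. It assumes the chunk shape that a Transformers.js Whisper pipeline returns when timestamps are requested (a `timestamp` pair in seconds plus `text`). The names `CaptionChunk`, `toVttTime`, and `chunksToWebVtt`, and the 2-second fallback duration, are hypothetical and not taken from the project's code.

```typescript
// One timestamped chunk of transcript text.
// Assumption: mirrors the chunks produced by a Whisper speech-recognition
// pipeline when timestamps are enabled; the end time can be missing.
interface CaptionChunk {
  timestamp: [number, number | null]; // [start, end] in seconds
  text: string;
}

// Left-pad a non-negative integer with zeros to the given width.
function pad(n: number, width: number): string {
  let s = String(n);
  while (s.length < width) s = "0" + s;
  return s;
}

// Format seconds as a WebVTT timestamp: HH:MM:SS.mmm
function toVttTime(seconds: number): string {
  const totalMs = Math.max(0, Math.round(seconds * 1000));
  const ms = totalMs % 1000;
  const totalSec = Math.floor(totalMs / 1000);
  const s = totalSec % 60;
  const m = Math.floor(totalSec / 60) % 60;
  const h = Math.floor(totalSec / 3600);
  return pad(h, 2) + ":" + pad(m, 2) + ":" + pad(s, 2) + "." + pad(ms, 3);
}

// Build a WebVTT document from chunks. Empty chunks are skipped, and a
// chunk without an end time gets a hypothetical fallback duration.
function chunksToWebVtt(chunks: CaptionChunk[], fallbackDuration = 2): string {
  const cues = chunks
    .filter((c) => c.text.trim().length > 0)
    .map((c) => {
      const start = c.timestamp[0];
      const end = c.timestamp[1] ?? start + fallbackDuration;
      return toVttTime(start) + " --> " + toVttTime(end) + "\n" + c.text.trim();
    });
  return ["WEBVTT"].concat(cues).join("\n\n") + "\n";
}
```

In a page, the resulting string could be wrapped in a Blob, exposed through `URL.createObjectURL`, and attached to the video as the `src` of a dynamically created <track> element, so the browser's native caption UI renders it.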

I have supplied the slides as evidence of the feedback received. I previously presented the same slides at a Wednesday Web AI Chrome Meetup with Kenji Baheux from Google Chrome, who was supportive and is in favor of bringing such multimodal capabilities to Chrome.

Using Google Chrome's Gemini Nano for STT

The explainer makes the case for enabling web captions automatically, giving businesses and content creators captions they can start from. It also sketches what the code for an auto-captions Web Transcriber API would look like, modeled on existing Chrome AI APIs such as the Prompt, Writer, Rewriter, and Translator APIs. Estimated usage is in the billions, and built-in AI solutions could help businesses save millions.

Accessibility Conformance Report WCAG SC

"Extensions are a great way to explore how something like this would work in practice."

- Thomas Steiner, Google Chrome

The vanilla JavaScript solution lets a user upload a video and see captions in real time. On first use, loading the AI model causes a delay of about 40 seconds, and I am working on another solution, built around a Web API ideated with Chrome, to fix this problem.

There are still two schools of thought in the explainer. In the first, the API gives document authors starter captions that they can then edit. In the second, generated captions are used directly when there is no <track> element. The latter might make authors even less likely to edit captions by hand, which would be bad. Yet the feature is needed for cases such as live video streams. Although the explainer addresses this, use of the API would benefit from more careful guidance.
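The "no <track> element" condition in the second approach can be sketched as a small check over a video's text tracks. This is only an illustration under stated assumptions: `TrackLike`, `CaptionSource`, and `pickCaptionSource` are hypothetical names, and the explainer may define the fallback rule differently. The only DOM fact relied on is that each text track exposes a `kind` such as "captions" or "subtitles".

```typescript
// Minimal view of a text track; DOM TextTrack objects expose `kind`.
interface TrackLike {
  kind: string;
}

// "author": the page already ships captions, so leave them alone.
// "auto": nothing usable was authored, so generated captions may be offered.
type CaptionSource = "author" | "auto";

// Decide where captions should come from for one video.
// Tracks of other kinds (chapters, metadata, descriptions) do not count.
function pickCaptionSource(tracks: ArrayLike<TrackLike>): CaptionSource {
  for (let i = 0; i < tracks.length; i++) {
    const kind = tracks[i].kind;
    if (kind === "captions" || kind === "subtitles") {
      return "author";
    }
  }
  return "auto";
}
```

In a browser this could be called as `pickCaptionSource(video.textTracks)`. Preferring authored tracks whenever they exist keeps the generated captions a fallback and not a replacement for manual work, which speaks to the concern raised above.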

Google Chrome will launch the APIs for testing and expressions of support through the early preview program. I hope to stay engaged and help with this and related APIs until that happens.